An embarrassment of assets

When complementary assets collide

Hey team,

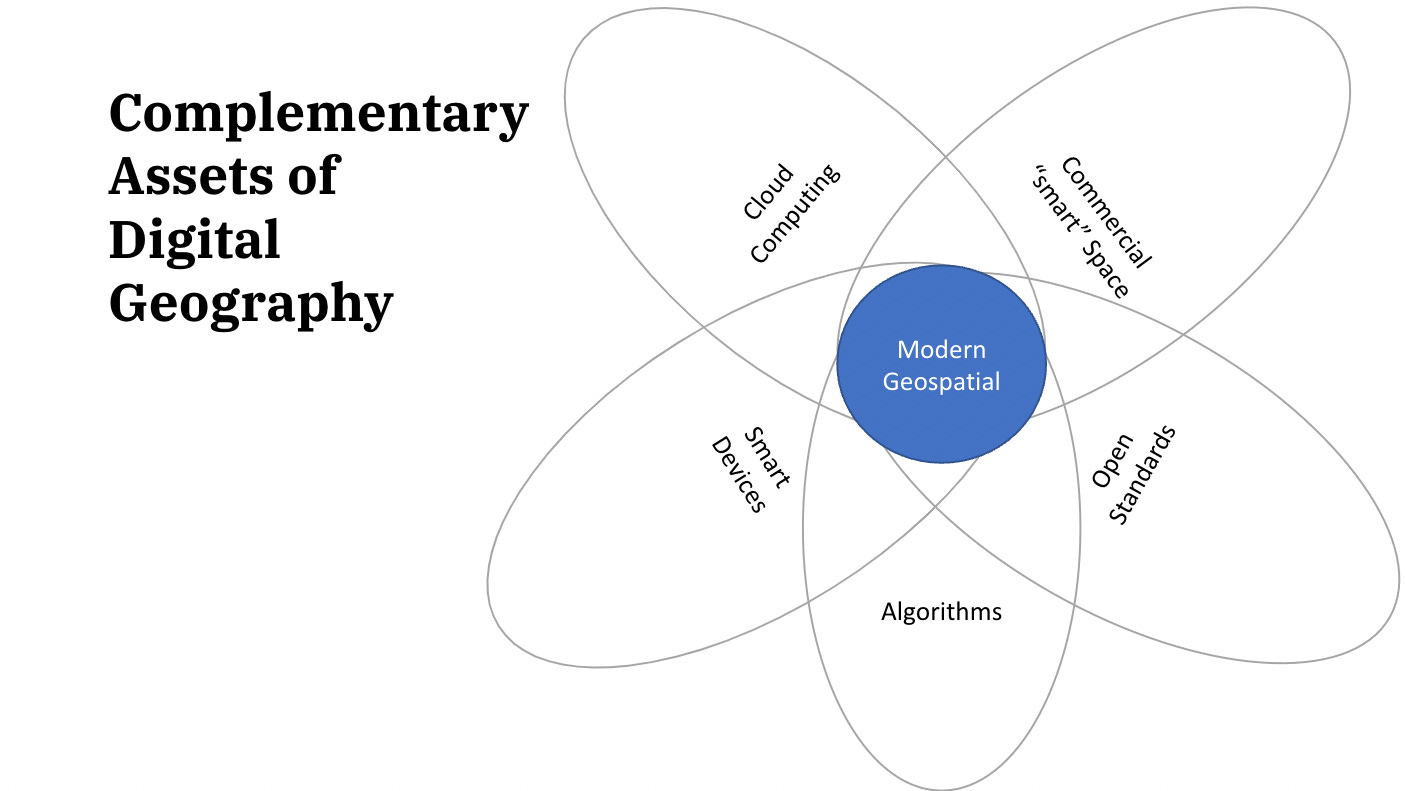

In our last discussion, we covered complementary assets. I outlined a few assets that I see as directly relevant to modern geospatial:

Commercial space

The cloud

Openness

Algorithms

Smart devices

Even in these, I am conflating things terribly. Obviously, the Smart devices category rather awkwardly includes GPS, which is a satellite thing. So, GPS might also be somewhat related to the commercial space category. When I think about GPS here, I think about its consumption more than its creation. But still, if we were to consider these as a Venn diagram, there is evidently some overlap. So, today, let’s talk about overlaps in assets, especially in the five assets above.

Evolving Assets

I talked a little about each of these assets in our last discussion. We could go into much more depth on each (and I reserve the right to do so later), but today I want to assume that we broadly understand what each covers and focus on what the overlaps might mean for the geospatial sector. More to the point, I want to look at how different assets have evolved to become more complementary to each other.

Space & the cloud

The rise of commercial space has resulted in an armada of satellite-borne sensory capabilities. These flocks of buzzing and whirring space-bots are tasked with collecting data concerning our planet and its environment. Consider that for a second: more sensors mean more data, every day. Therefore, there is a need to move that data and store it. This immediately raises questions about radio or laser communications methods, ground stations, and compute and storage. In reality, the “smart-space” revolution could not happen without robust compute capabilities. Now we see a commercial willingness to package satellite ground operations as products, such as AWS Ground Station.

If we think this through a little more, we can see that commercial space (including the development of the CubeSat standard) combined with cloud computing means that a small seed-funded startup can achieve what once only a complex, expensive government project could. This is a functionally different economy, and it can be seen in the number of space startups that are looking for funding.

Note: As of July 14th, 2022, the market is tightening for venture investment generally, but space startups remain “expensive.” It is unclear how long this might last.

The cloud and openness

There is a complicated relationship between openness and the cloud. Firstly, I have lazily conflated open source code, open data, and open standards. People can and should take issue with this. Books have been written on each of these subjects independently. But I do find lumping these together into “openness” useful for convenience, and philosophically these all notionally point in the same direction: towards open innovation. The key consideration here is that doing things in the open builds a community which can help with idea generation and cross-functional work.

In particular, open standards allow multiple data sources to be aggregated and compared. In no uncertain terms, open standards open minds.

For this discussion, it is worth considering that the data gathered by all this sensory capacity is stored in the cloud. If that data were unavailable to the public in a consumable manner, it would have little commercial value. Therefore, publishing data in an open manner is very valuable. Beyond this, numerous government-funded scientific missions have begun to publish data on the cloud in an open manner (see the AWS Open Data program, for example). This makes public data easily accessible, increasing its value to the community at large.

There is a more acrimonious relationship between the open source software community and the cloud, where certain open source products have been subsumed into the cloud product mix and offered as a managed service. This is antithetical to the open source credo and turns work that is often undertaken pro bono and in good faith by open source developers into a cloud profit centre. This activity is deeply disingenuous, if not actually illegal.

Algorithms and the cloud

Again, data must be stored, and the cloud is key for this activity. But data is a raw product. To create value from data, an algorithm must often be applied. For the sake of this discussion, an algorithm is a repeatable process that is usually computational. The cloud provides various means by which to run algorithms. Primarily, we should simply consider that easier access to data means a greater need for algorithms. Indeed, one could argue that open standards also increase the utility of any algorithm. So open data stored on the cloud in a format adhering to an open standard becomes increasingly easy to use.

Smart devices, the cloud, and algorithms

Smart devices provide a situational focus: they know where they are. They also provide connectivity. Connectivity means devices are able to access resources (websites and data) on the cloud, and location can be an input for an algorithm. Because websites and apps are powered by the cloud, smart devices would be very limited in their utility without cloud resources. Again, the cloud has provided the basic infrastructure for technology startups to build and test businesses quickly and inexpensively.

This interaction will only become more obvious as we move towards an “augmented future.” Clearly, augmented reality will at some point overtake the utility of flat, planimetric maps. As we see our cartographic experiences change in perspective and become somewhat immersive, these data flows will be fed by the cloud and connectivity. The experience will be modern-cartographic but entirely enabled by complementary assets.

Modern geospatial

There is some disagreement on how to describe ourselves these days. And to be honest, I don’t really care that much anymore (as long as everyone does what I say, of course ;) ). However, the commonality I do see is a realization that we can do new and interesting things. This is the key message of today’s discussion. Complementary assets have provided us with a series of new capabilities:

Each of those complementary assets discussed last time exists independently from “geospatial”

Each of those assets existed independently in some form 10 years ago

Each of those assets has evolved in its own right

In a Venn diagram, each of those assets has evolved to overlap the others

Modern geospatial is what I call the intersection of them all.

Modern geospatial did not exist ten years ago. If there is one central insight I can provide in this series of discussions, it is this. Everything we can do now was theoretically possible a decade ago (in research labs and well-funded government departments), but the costs and effort involved were astonishing.

Today, that is not the case.

Today, we have a series of absolutely new capabilities.

Today, geospatial is a new market, an unsettled market.

I would love to hear any comments you might have.

Next time, we will talk about S-curves.

After reading thus far (oldest to latest), it seems like you are saying that the function has changed but form hasn't caught up yet. Is this understanding somewhat accurate? If so, this is brewing in my head for now...

If form must follow function (big IF), people bringing in the many new forms need to see that the function has indeed changed and that the critical mass for that change to be perceptible is already met. I wonder if that's actually the case.

If the leading indicator (function in this case) didn't rock the boat yet, the lagging indicator cannot do much. Perhaps we need to gauge: a) Are there cohorts that have sensed that the function has changed (as in, who and where are those who noticed the leading indicator changing), b) Are they anywhere close to the tipping point to help with ease of adoption (least energy/effort), and c) If we cannot answer a and b, how should we probe and offer emergent practices to make it easy to answer a and b.

If function follows form (seems to be the case more often than not), perhaps the critical mass is yet to be met because the "latent" needs are ineffable at best or unmanifested.

And if we are talking about complex environments, cause and effect are either inapplicable or too far apart in space and time to retrace. Complex systems seem to have certain propensities that are kinda palpable. Perhaps trying to find the direction and dynamics of the flow streams might enable us to see and manage the interfaces as threats and opportunities surface.

Perhaps you've already addressed this in the other posts. Moving on :)